Machine learning is cool. There is no denying that. In this post we will try to make it a little uncool. Well, it will still be cool, but you may start looking at it differently. Machine learning is not a black box. It is intuitive, and this post is here to convey that.

If I give you the function f(x) = x^2 + log(x) and ask you to tell me the value of f(2), you will first laugh at me and then run away to do something more important. This is trivial for you, right? Once you have a function that maps inputs to outputs, it is very easy to get the output for any new input.
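Evaluating a known function really is mechanical. Here is a quick check in Python, assuming `log` means the natural logarithm (the post does not say which base):

```python
import math

def f(x: float) -> float:
    # f(x) = x^2 + log(x), taking log as the natural logarithm (an assumption).
    return x ** 2 + math.log(x)

print(f(2))  # 4 + ln 2, roughly 4.693
```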

Machine learning helps you find a function that maps inputs to outputs. How does it do that? What is this function? We will try to answer these questions in the paragraphs below.

Let us try to answer these questions through a problem that machine learning can solve. Assume you are a technical recruiter who has been running a recruitment firm for the last 3 years. Being tech savvy, you follow the latest trends in technology, and you have come across machine learning. You understand that machine learning can be used to predict the future, provided you have data from the past.

You think: how can I use it to predict the expected salary of a candidate from other factors? The first question that comes to mind: do you have the data? And you hear a pleasant yes!

You have the following data collected at the individual level:

- Age of the candidate
- Gender of the candidate
- Number of years of experience
- Highest education degree
- College: Top notch, Average, Normal
- Current salary
- Sector: IT, Finance, Electronics
- Salary (the value we want to predict)

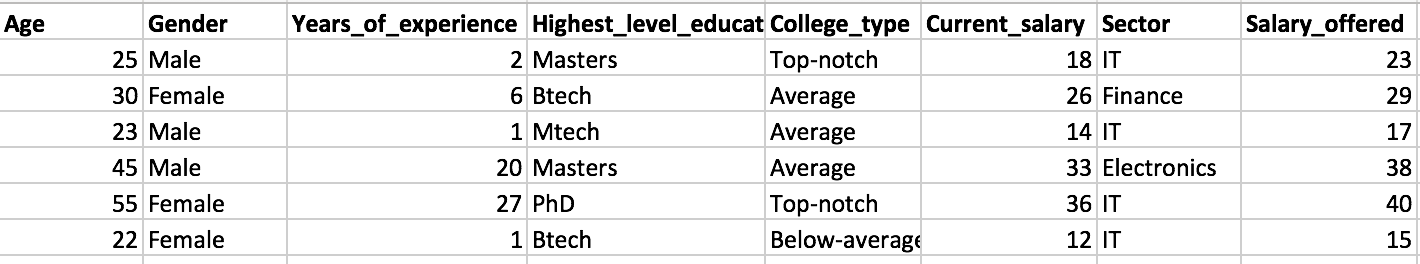

And a few others. For now let us assume we have just these features and we want to predict the expected salary using these features. We have 3 years of data that has approximately 10,000 rows. So your dataset looks something like the below data:

So essentially we have seven independent features, X (age, gender, years of experience, highest level of education, college, current salary, and sector), and the corresponding salary, Y. The next time a candidate comes in, we will have the candidate's age, gender, years of experience, and other features. What we won't have is the candidate's salary, and that is the value we want to estimate.
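As a data structure, each training row pairs the seven features X with one salary Y. Here is a minimal Python sketch of that shape. The `Candidate` class, its field names, and the rows of values are hypothetical, made up for illustration rather than taken from the real dataset.

```python
from dataclasses import dataclass, fields
from typing import List, Tuple

@dataclass
class Candidate:
    # The seven independent features, X (field names are hypothetical).
    age: int
    gender: str
    years_of_experience: float
    highest_education: str
    college: str          # "Top notch", "Average" or "Normal"
    current_salary: float
    sector: str           # "IT", "Finance" or "Electronics"

# A training row pairs the features X with the observed salary Y.
TrainingRow = Tuple[Candidate, float]

# Hypothetical rows with made-up values; the real dataset has about 10,000.
training_data: List[TrainingRow] = [
    (Candidate(29, "F", 5, "Bachelor's", "Top notch", 1_200_000, "IT"), 1_500_000),
    (Candidate(35, "M", 10, "MBA", "Average", 1_800_000, "Finance"), 2_100_000),
]

X = [candidate for candidate, _ in training_data]
Y = [salary for _, salary in training_data]

# A new candidate: X is known, Y is what we want to estimate.
new_candidate = Candidate(31, "M", 7, "Master's", "Normal", 1_400_000, "Electronics")

print(len(fields(Candidate)))  # 7 features
```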

There is some function, say f, that maps these X values to the Y values. How do we find this function? We use the 3 years of data we have: the training data.

We won't be able to find the actual function, the true f, because we don't have all the data in the world. Collecting the entire population of data is impossible. What we have instead is a sample of data from the population, and we use this sample as our training data.

Often there are also factors that can't be captured. The set of independent features we have captured is not exhaustive; there will obviously be other features that affect the salary.

In our salary prediction example, exceptional knowledge of some rare topic may land a candidate exorbitant offers from a few companies. It is difficult to capture factors like these.
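A common way to write this down is Y = f(X) + ε, where the error term ε collects everything the features do not capture. The toy simulation below makes that concrete. Everything in it is invented for illustration: the "true" function `true_f`, its coefficients, the 2% chance of a rare-expertise bonus, and the noise level.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

def true_f(years_of_experience: float) -> float:
    # Hypothetical "true" relationship; in practice nobody gets to see this.
    return 30_000 + 8_000 * years_of_experience

def observed_salary(years_of_experience: float) -> float:
    # Y = f(X) + error, where the error stands in for uncaptured factors:
    # a rare-expertise bonus for about 2% of candidates, plus everyday noise.
    rare_expertise_bonus = 50_000 if random.random() < 0.02 else 0
    everyday_noise = random.gauss(0, 5_000)
    return true_f(years_of_experience) + rare_expertise_bonus + everyday_noise

# Our "sample from the population": 1,000 candidates.
sample = []
for _ in range(1_000):
    x = random.uniform(0, 20)
    sample.append((x, observed_salary(x)))

# Even knowing the right feature, observed salaries scatter around f(X).
errors = [y - true_f(x) for x, y in sample]
print("largest gap between Y and f(X):", max(abs(e) for e in errors))
```

In a real problem we would only ever see `sample`, never `true_f`, which is exactly why we can only estimate f.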

Now we understand why we can't have the true f. So we will try to get an estimate of f, say f^. We want this f^ to be as close as possible to the true f, i.e. a good proxy for the true function. There will obviously be some error in estimating the true function, and we want to make this error as low as possible. How do we go about getting f^, the estimate of the true function f?

We have the data: remember the 3 years of historical data containing the X features and the corresponding Y values. This is called the training data, and for a reason: we use it to train the underlying algorithms that produce the estimated function f^.

So we use the training data to get the estimated function f^. But how? We try to minimize the error between the true salary, Y, and the salary predicted by the model, Y^. For now, just understand that there are ways to minimize this error and obtain the estimated function.
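Here is one minimal sketch of "minimize the error between Y and Y^", under two assumptions that are ours, not the post's: f^ is a straight line in a single feature (years of experience), and the error is measured as mean squared error (MSE). For that setup, ordinary least squares gives the slope and intercept that minimize the error in closed form. The five training points are made up.

```python
from typing import List, Tuple

def mean_squared_error(y_true: List[float], y_pred: List[float]) -> float:
    # Average of the squared gaps between the true Y and the predicted Y^.
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / len(y_true)

def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    # Ordinary least squares: the slope and intercept that minimize the MSE.
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var = sum((x - x_mean) ** 2 for x in xs)
    slope = cov / var
    intercept = y_mean - slope * x_mean
    return slope, intercept

# Hypothetical training data: years of experience -> salary.
years = [1, 3, 5, 7, 10]
salary = [35_000, 52_000, 70_000, 83_000, 112_000]

slope, intercept = fit_line(years, salary)

def f_hat(x: float) -> float:
    # The estimated function f^.
    return intercept + slope * x

predicted = [f_hat(x) for x in years]
print(f"f^(x) = {intercept:.0f} + {slope:.0f} * x")
print("training MSE:", mean_squared_error(salary, predicted))
```

Nudging the slope or intercept away from the fitted values can only increase the training MSE, which is what "minimizing the error" means here.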

The function could be a simple one, such as a linear relationship between the salary and the features, or, often, a more complex relationship that is not linear. Techniques such as linear regression and decision trees help you get a simple estimate and a more complex one, respectively.
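To see why the choice of technique matters, here is a toy comparison on invented data where salary jumps once experience passes 5 years. A straight line cannot follow that step. The "tree" here is a single-split decision tree (a decision stump), the simplest possible case; real decision-tree implementations grow deeper trees, but they are built from the same kind of threshold split.

```python
from typing import Callable, List

def mse(ys: List[float], preds: List[float]) -> float:
    # Mean squared error between true and predicted values.
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

def fit_linear(xs: List[float], ys: List[float]) -> Callable[[float], float]:
    # Least-squares straight line: y is approximated by a + b * x.
    n = len(xs)
    xm, ym = sum(xs) / n, sum(ys) / n
    b = sum((x - xm) * (y - ym) for x, y in zip(xs, ys)) / sum((x - xm) ** 2 for x in xs)
    a = ym - b * xm
    return lambda x: a + b * x

def fit_stump(xs: List[float], ys: List[float]) -> Callable[[float], float]:
    # Depth-1 decision tree: try a threshold between each pair of neighbouring
    # x values, predict the mean y on each side, keep the split with lowest MSE.
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between equal x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for x, y in pairs if x <= threshold]
        right = [y for x, y in pairs if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        preds = [left_mean if x <= threshold else right_mean for x, _ in pairs]
        error = mse([y for _, y in pairs], preds)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

# Invented data: salary jumps once experience passes 5 years.
years = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
salary = [40_000, 42_000, 41_000, 43_000, 42_000, 90_000, 91_000, 89_000, 92_000, 90_000]

linear = fit_linear(years, salary)
stump = fit_stump(years, salary)
print("linear MSE:", mse(salary, [linear(x) for x in years]))
print("stump MSE: ", mse(salary, [stump(x) for x in years]))
```

On data like this the stump's error is far lower; on data that really is linear, the straight line would usually win. Neither technique is better in general; each encodes a different guess about the shape of f.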

Once you have this estimated function,

f^(age, gender, years of experience, highest level of education, college, current salary, sector) → salary

you just pass in X and get your Y. There, you have a machine learning model. And you know what you have done: you have come up with a nice estimate of the true function.
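In code, "pass in X and get Y" is literally a function call. One practical detail: features like college and sector are categories, so models such as linear regression usually need them turned into numbers first, for example with one-hot encoding. The sketch below assumes a linear f^ over a subset of the features; the weights and bias are hypothetical placeholders, not values learned from any real data.

```python
from typing import Any, Dict, List

# Category levels taken from the post; the encoding order is our own choice.
COLLEGES = ["Top notch", "Average", "Normal"]
SECTORS = ["IT", "Finance", "Electronics"]

def one_hot(value: str, levels: List[str]) -> List[float]:
    # e.g. one_hot("Finance", SECTORS) -> [0.0, 1.0, 0.0]
    return [1.0 if value == level else 0.0 for level in levels]

def encode(candidate: Dict[str, Any]) -> List[float]:
    # Turn a subset of X into a numeric vector (other features omitted for brevity).
    return (
        [float(candidate["years_of_experience"]), float(candidate["current_salary"])]
        + one_hot(candidate["college"], COLLEGES)
        + one_hot(candidate["sector"], SECTORS)
    )

# Hypothetical weights standing in for what training would produce.
WEIGHTS = [20_000.0, 1.1, 50_000.0, 20_000.0, 0.0, 30_000.0, 40_000.0, 10_000.0]
BIAS = 10_000.0

def f_hat(candidate: Dict[str, Any]) -> float:
    # A linear estimate: bias + sum of weight * feature.
    return BIAS + sum(w * x for w, x in zip(WEIGHTS, encode(candidate)))

new_candidate = {"years_of_experience": 4, "current_salary": 900_000,
                 "college": "Average", "sector": "IT"}
print(f_hat(new_candidate))  # an estimated salary, Y^
```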

Once you have this estimate, there are other questions you might want to think over. How good an estimate of the true function is it? What assumptions did you make to estimate it? When would this estimate not be a good choice? I will try to answer these questions in future posts. For now, I hope you get the gist that the essence of machine learning is function estimation.

Did you find the article useful? If you did, share your thoughts in the comments, and share this post with people you think would enjoy reading it. Let's talk more about data science.